pip install plain

Good news — I managed to get "plain" on PyPI!

Big thanks to the previous owner, Jay Marcyes, for agreeing to transfer the name to me. With that in hand, I feel really good about how the packages are published, and about having the .com to go with them. I still type "bolt" out of habit sometimes, but I'm getting used to the new name.

A few other updates:

Rewrite of plain-pytest

The plain.pytest package was originally a fork of pytest-django.

Testing was not my top priority, so it's been a bit of a mess all along. I finally needed to dive in and ended up completely rewriting the package and some of the internal testing utilities that went with it.

A handful of fixtures is all I've needed for basic pytest integration (more fixtures to come, I'm sure).

    import pytest

    from plain.runtime import settings, setup
    from plain.test.client import Client, RequestFactory


    def pytest_configure(config):
        # Run Plain setup before anything else
        setup()


    @pytest.fixture(autouse=True, scope="session")
    def _allowed_hosts_testserver():
        # Add testserver to ALLOWED_HOSTS so the test client can make requests
        settings.ALLOWED_HOSTS = [*settings.ALLOWED_HOSTS, "testserver"]


    @pytest.fixture()
    def client() -> Client:
        """A Plain test client instance."""
        return Client()


    @pytest.fixture()
    def request_factory() -> RequestFactory:
        """A Plain RequestFactory instance."""
        return RequestFactory()

You'll notice that database-related stuff isn't here though.

That's because plain.models is a separate package, and you only want those fixtures if you're using it!

After a little wrangling, this turned out to be feasible too by having separate pytest fixtures in that package.

(Sidenote: I think the way pytest fixtures can use yield is pretty cool.)

    import pytest

    from plain.signals import request_finished, request_started

    from .. import transaction
    from ..backends.base.base import BaseDatabaseWrapper
    from ..db import close_old_connections, connections
    from .utils import (
        setup_databases,
        teardown_databases,
    )


    @pytest.fixture(autouse=True)
    def _db_disabled():
        """
        Every test should use this fixture by default to prevent
        access to the normal database.
        """

        def cursor_disabled(self):
            pytest.fail("Database access not allowed without the `db` fixture")

        BaseDatabaseWrapper._old_cursor = BaseDatabaseWrapper.cursor
        BaseDatabaseWrapper.cursor = cursor_disabled

        yield

        BaseDatabaseWrapper.cursor = BaseDatabaseWrapper._old_cursor


    @pytest.fixture(scope="session")
    def setup_db(request):
        """
        This fixture is called automatically by `db`,
        so a test database will only be setup if the `db` fixture is used.
        """
        verbosity = request.config.option.verbose

        # Set up the test db across the entire session
        _old_db_config = setup_databases(verbosity=verbosity)

        # Keep connections open during request client / testing
        request_started.disconnect(close_old_connections)
        request_finished.disconnect(close_old_connections)

        yield _old_db_config

        # Put the signals back...
        request_started.connect(close_old_connections)
        request_finished.connect(close_old_connections)

        # When the test session is done, tear down the test db
        teardown_databases(_old_db_config, verbosity=verbosity)


    @pytest.fixture()
    def db(setup_db):
        # Set .cursor() back to the original implementation
        BaseDatabaseWrapper.cursor = BaseDatabaseWrapper._old_cursor

        # Keep track of the atomic blocks so we can roll them back
        atomics = {}

        for connection in connections.all():
            # By default we use transactions to rollback changes,
            # so we need to ensure the database supports transactions
            if not connection.features.supports_transactions:
                pytest.fail("Database does not support transactions")

            # Clear the queries log before each test?
            # connection.queries_log.clear()

            atomic = transaction.atomic(using=connection.alias)
            atomic._from_testcase = True  # TODO remove this somehow?
            atomic.__enter__()
            atomics[connection] = atomic

        yield setup_db

        for connection, atomic in atomics.items():
            if (
                connection.features.can_defer_constraint_checks
                and not connection.needs_rollback
                and connection.is_usable()
            ):
                connection.check_constraints()

            transaction.set_rollback(True, using=connection.alias)
            atomic.__exit__(None, None, None)

            connection.close()

So now to write a test that uses the database, you just need to include the db fixture:

    def test_a_thing(db):
        # This test has a database connection
        pass
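If the yield trick is new to you, here's a minimal sketch of the same patch-then-restore shape that `_db_disabled` uses, written with the standard library's `contextlib.contextmanager` so it runs without pytest or Plain installed. `Wrapper` and `cursor_disabled` are hypothetical stand-ins made up for this example, not Plain's actual classes. Everything before the `yield` is setup and everything after it is teardown, which is the same split pytest applies to a yield fixture.

```python
from contextlib import contextmanager


class Wrapper:
    """Hypothetical stand-in for a database wrapper class."""

    def cursor(self):
        return "real cursor"


@contextmanager
def cursor_disabled():
    """Swap Wrapper.cursor for a version that raises, then put it back."""

    def blocked(self):
        raise RuntimeError("Database access not allowed")

    original = Wrapper.cursor
    Wrapper.cursor = blocked  # setup: runs before the yield
    try:
        yield
    finally:
        Wrapper.cursor = original  # teardown: runs after the yield
```

Inside `with cursor_disabled():`, any call to `Wrapper().cursor()` raises, and once the block exits the original method is back in place.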

I know there are more features that Django and pytest-django both offer, and I've intentionally left them out until someone needs them. My hunch is that a lot of those features will never come back.

One thing I'm starting to wonder though is whether plain.pytest should be a separate package, or if that is actually part of core...

Time will tell.

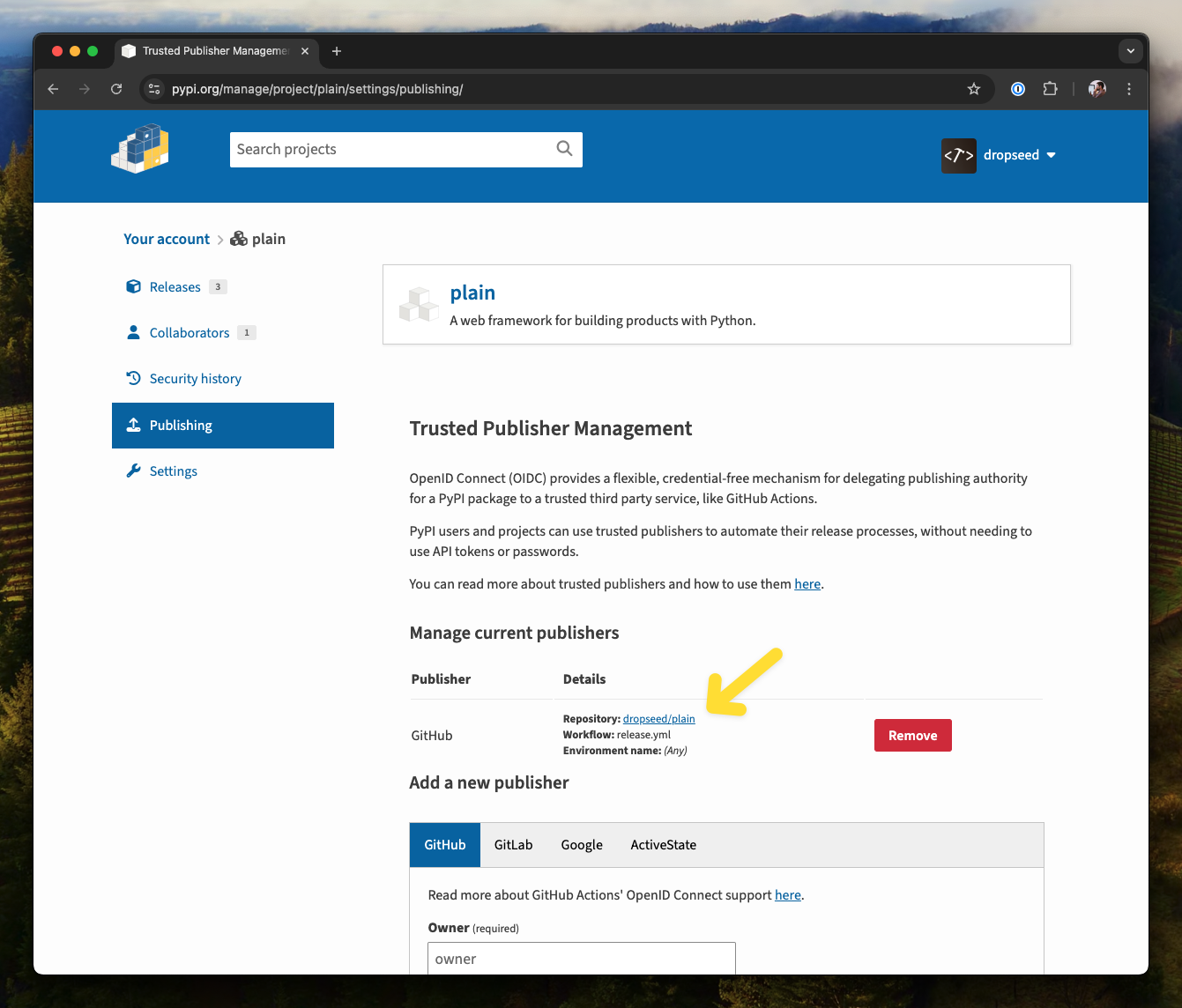

PyPI trusted publishers with Poetry

For years now, I've used poetry publish to publish packages to PyPI.

It's a single command, using the toolchain I also use for dependencies, and it's always worked for me.

Last year, PyPI introduced the concept of "trusted publishers" — which is intended to be more secure than long-lived API tokens. To be honest, I don't know that much about OpenID Connect (OIDC), but dipping my toes in is one way to learn.

Plain is multiple packages, and it works just fine to add the same "trusted publisher" to the settings for each one.

I didn't want to replace poetry publish with the "official" PyPI GitHub Action, and I eventually found my answer at the bottom of the PyPI docs page on using a trusted publisher (the URL is in the workflow comment below).

Mint the token yourself, and pass it to Poetry:

    name: release

    on:
      workflow_dispatch: {}
      push:
        branches:
          - main

    jobs:
      release:
        if: ${{ startsWith(github.event.head_commit.message, 'Release') || github.event_name == 'workflow_dispatch' }}
        runs-on: ubuntu-latest
        permissions:
          id-token: write
        steps:
          - uses: actions/checkout@v3
          - run: pipx install poetry
          - uses: actions/setup-python@v4
            with:
              python-version: "3.12"
              cache: poetry

          # https://docs.pypi.org/trusted-publishers/using-a-publisher/
          - name: Mint API token
            id: mint-token
            run: |
              # retrieve the ambient OIDC token
              resp=$(curl -H "Authorization: bearer $ACTIONS_ID_TOKEN_REQUEST_TOKEN" \
                "$ACTIONS_ID_TOKEN_REQUEST_URL&audience=pypi")
              oidc_token=$(jq -r '.value' <<< "${resp}")

              # exchange the OIDC token for an API token
              resp=$(curl -X POST https://pypi.org/_/oidc/mint-token -d "{\"token\": \"${oidc_token}\"}")
              api_token=$(jq -r '.token' <<< "${resp}")

              # mask the newly minted API token, so that we don't accidentally leak it
              echo "::add-mask::${api_token}"

              # see the next step in the workflow for an example of using this step output
              echo "api-token=${api_token}" >> "${GITHUB_OUTPUT}"

          - name: Publish packages
            run: |
              for package_dir in plain*; do
                cd $package_dir
                rm -rf dist
                poetry build
                poetry publish --no-interaction --skip-existing
                cd ..
              done
            env:
              POETRY_PYPI_TOKEN_PYPI: ${{ steps.mint-token.outputs.api-token }}

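Now to release, I just bump the version in any pyproject.toml and commit with a message that starts with "Release", like "Release plain.thing v1.2.3".

The action then rolls through every package and tries to publish each one. Because of --skip-existing, versions that are already on PyPI are simply skipped, so there are no real downsides.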
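To make the "Mint API token" step a little less magical, here's a rough Python sketch of the same two-request exchange that the curl and jq commands perform. It isn't part of the workflow above; `http_json`, `mint_api_token`, and the injectable `fetch` parameter are names invented for illustration. Only the environment variables, the `audience=pypi` parameter, the mint-token URL, and the `value` / `token` response keys come from the workflow itself.

```python
import json
import os
import urllib.request

PYPI_MINT_URL = "https://pypi.org/_/oidc/mint-token"


def http_json(url, headers=None, body=None):
    """Send a GET (or a POST with a JSON body when one is given) and decode the JSON reply."""
    data = json.dumps(body).encode() if body is not None else None
    request = urllib.request.Request(url, data=data, headers=headers or {})
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def mint_api_token(environ=os.environ, fetch=http_json):
    """Trade the ambient GitHub Actions OIDC token for a PyPI API token."""
    # Step 1: retrieve the ambient OIDC token, scoped to the "pypi" audience
    oidc = fetch(
        environ["ACTIONS_ID_TOKEN_REQUEST_URL"] + "&audience=pypi",
        headers={
            "Authorization": "bearer " + environ["ACTIONS_ID_TOKEN_REQUEST_TOKEN"]
        },
    )

    # Step 2: exchange the OIDC token for an API token
    minted = fetch(PYPI_MINT_URL, body={"token": oidc["value"]})
    return minted["token"]
```

The `fetch` argument only exists so the exchange can be exercised with a fake HTTP function; inside a real GitHub Actions job with `id-token: write`, calling `mint_api_token()` with the defaults would make the two network requests.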

Next priorities towards 1.0

Getting to a "1.0" release is my next major goal. That seems critical if I expect anyone else to use it (or even try it).

Since there are multiple packages, I expect that the core plain package and a handful of other critical ones will hit 1.0 together, and some of the more experimental ones can stay in a pre-1.0 state for the time being.

But even with that, there is a lot of work to do in finalizing certain APIs and fleshing out more documentation.

There's also a question of how to version the packages...

I go back and forth, but I'm leaning towards having the major version number indicate cross-package compatibility.

So, plain.models==1.5.4 would be compatible with plain==1.0.0.

And if plain moves to 2.x, then all packages would move to 2.x as well, regardless of whether they needed major updates.

Then in the GitHub monorepo, there might be a branch for each major version (1.x, 2.x, etc).

The downside is that a package couldn't independently release a new major version though...
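As a tiny sketch of what that compatibility rule would mean in practice, assuming simple dotted version strings like `1.5.4` (no pre-release tags): two packages are compatible when their major numbers match. The `major` and `is_compatible` helpers are hypothetical, not anything Plain ships.

```python
def major(version: str) -> int:
    """Return the leading (major) component of a dotted version string."""
    return int(version.split(".")[0])


def is_compatible(package_version: str, core_version: str) -> bool:
    """Under the proposed scheme, matching major versions means compatible."""
    return major(package_version) == major(core_version)


# plain.models==1.5.4 checked against plain==1.0.0 and plain==2.0.0
print(is_compatible("1.5.4", "1.0.0"))  # True
print(is_compatible("1.5.4", "2.0.0"))  # False
```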